Overview of Aviatrix High-Performance Encryption

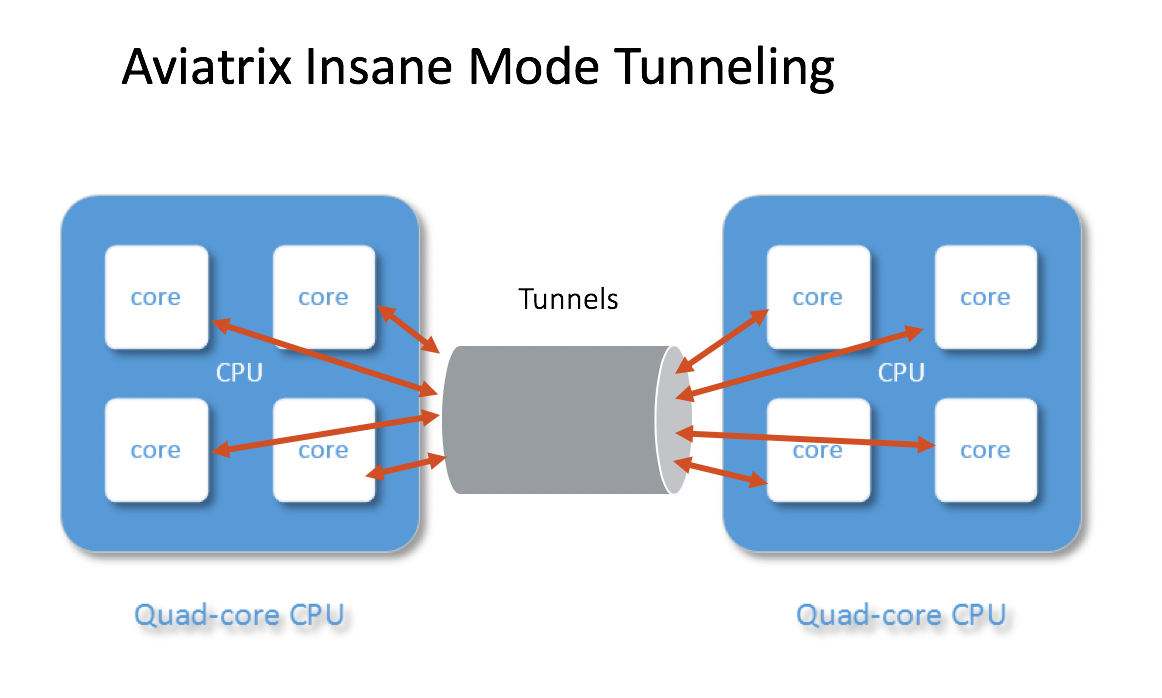

Aviatrix High Performance Encryption tunneling techniques establish multiple tunnels between two virtual routers, so that all CPU cores can be used and performance scales with the available CPU resources, as shown in the diagram above.

With Aviatrix High Performance Encryption Mode tunneling, IPsec encryption can achieve 10Gbps, 25Gbps and beyond, leveraging the multiple CPU cores in a single instance, VM or host.

Note: NAT over HPE is not supported.

Why is Transit VPC/VNet performance capped at 1.25Gbps?

In the current Transit VPC/VNet solution, throughput is capped at 1.25Gbps even when the private link between the on-prem network and the cloud (Direct Connect (DX)/ExpressRoute/FastConnect/InterConnect) provides 10Gbps. The reason is that the Transit VPC/VNet deployment runs an IPsec session between the VGW/VPN Gateway and the Transit Gateway, and the VGW/VPN Gateway has a performance limitation.

AWS VGW and other Cloud Service Providers' IPsec VPN solutions have a published performance cap of 1.25Gbps.
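The cap follows from a simple bottleneck rule: traffic that crosses several hops in series can go no faster than the slowest hop. The minimal sketch below applies that rule to the 10Gbps private link and the 1.25Gbps IPsec cap described above; the constant names are illustrative, and the model ignores protocol overhead.

```python
LINK_GBPS = 10.0        # on-prem <-> cloud private link (DX/ExpressRoute/...)
IPSEC_CAP_GBPS = 1.25   # published VGW/VPN Gateway IPsec cap


def path_throughput_gbps(hop_capacities_gbps: list[float]) -> float:
    """Throughput of hops traversed in series is bounded by the slowest hop."""
    return min(hop_capacities_gbps)


effective = path_throughput_gbps([LINK_GBPS, IPSEC_CAP_GBPS])
utilization = effective / LINK_GBPS
print(f"effective: {effective} Gbps, link utilization: {utilization:.1%}")
# effective: 1.25 Gbps, link utilization: 12.5%
```

In other words, only one eighth of the 10Gbps link can carry encrypted traffic while the IPsec hop remains the bottleneck.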

Most virtual routers and software-based routers run on general-purpose CPUs. Despite vast advances in CPU technology, why doesn't IPsec performance scale further?

It turns out the problem lies in the nature of tunneling, a common technique in networking to connect two endpoints.

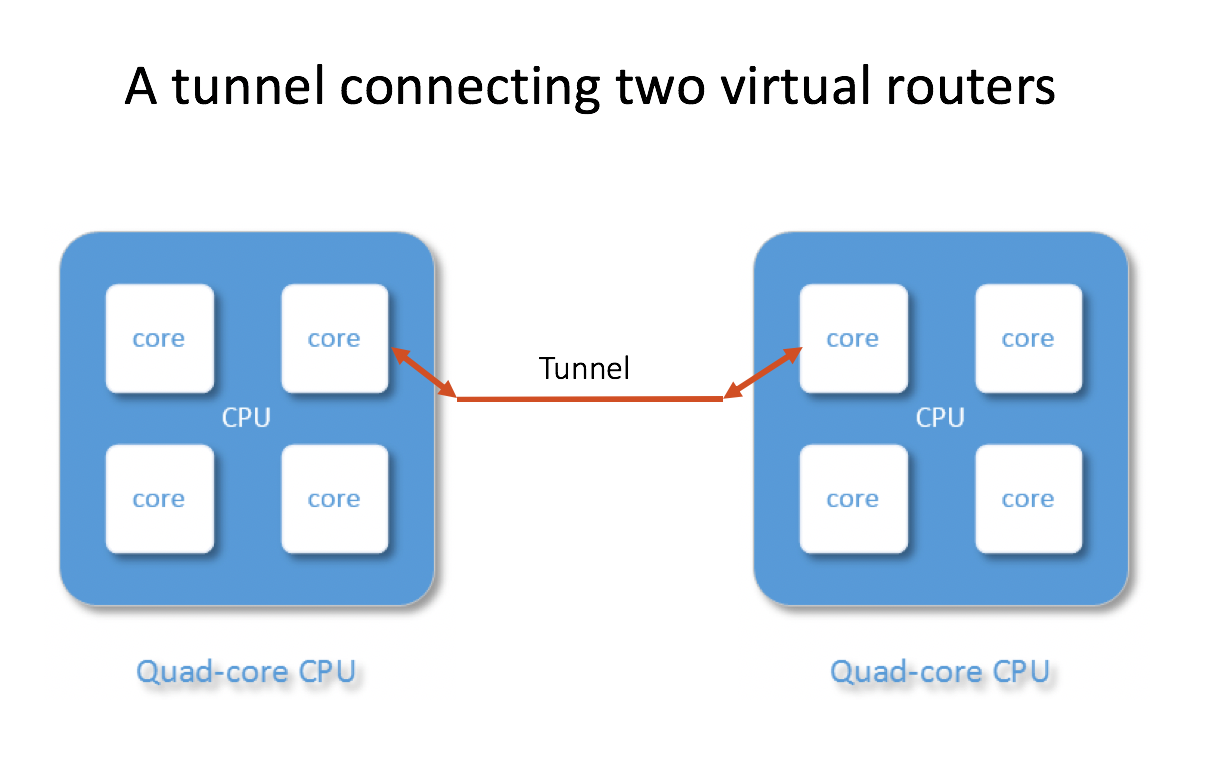

When two routers built on general-purpose servers or virtual machines are connected by an IPsec tunnel, a single UDP or ESP session runs between the two machines, as shown in the diagram above.

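In the diagram above, the virtual router has multiple CPU cores, but because only one tunnel is established, the Ethernet interface directs all incoming packets to a single core. The interface spreads packets across cores by hashing their outer header fields, and every packet in one tunnel carries the same outer header. Performance is therefore limited to one CPU core, regardless of how many CPU cores and how much memory you provide.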

This is true not only for IPsec, but also for all tunneling protocols, such as GRE and IPIP.
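To illustrate why one tunnel lands on one core, the sketch below simulates hash-based receive dispatch, the general idea behind receive-side scaling (RSS) on NICs. It is a simplified model under stated assumptions: the outer header is hashed on source/destination IP and UDP port, CRC32 stands in for the Toeplitz hash that real NICs typically use, the router has a hypothetical 8 cores, and multiple tunnels are modeled as distinct hypothetical UDP source ports. None of these details describe Aviatrix's actual tunnel implementation.

```python
import zlib
from collections import Counter

NUM_CORES = 8      # hypothetical number of CPU cores on the router
PACKETS = 10_000   # packets to simulate


def rx_core(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
            num_cores: int = NUM_CORES) -> int:
    """Choose a receive core by hashing a packet's outer header fields.

    Assumption: CRC32 stands in for the Toeplitz hash used by real NICs.
    """
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % num_cores


def cores_used(tunnels: list[tuple[str, str, int, int]]) -> Counter:
    """Send PACKETS round-robin over the tunnels; count packets per core."""
    counts: Counter = Counter()
    for i in range(PACKETS):
        counts[rx_core(*tunnels[i % len(tunnels)])] += 1
    return counts


# One tunnel: every packet has the same outer header, so one core gets all.
single = cores_used([("10.0.0.1", "10.0.1.1", 4500, 4500)])

# 32 tunnels, each with its own (hypothetical) UDP source port.
multi = cores_used([("10.0.0.1", "10.0.1.1", 50000 + t, 4500)
                    for t in range(32)])

print("single tunnel -> cores used:", len(single))
print("32 tunnels    -> cores used:", len(multi))
```

With one tunnel, every packet hashes to the same core. With 32 tunnels, the packets spread across several cores, which is the effect that multiple tunnels are meant to exploit.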

How does Aviatrix High Performance Encryption work?

When a gateway is launched with High Performance Encryption enabled, a new /26 public subnet is created, and the High Performance Encryption Mode gateway is launched in that subnet.
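For sizing, a /26 prefix leaves 6 host bits, or 2^6 = 64 addresses. The sketch below checks this with Python's standard `ipaddress` module. The CIDR 10.10.0.0/26 is a hypothetical example, and the 5 reserved addresses apply to AWS subnets; other clouds may reserve a different number.

```python
import ipaddress

# Hypothetical CIDR; the text only states that a /26 public subnet is created.
subnet = ipaddress.ip_network("10.10.0.0/26")

AWS_RESERVED = 5  # AWS reserves the first four and the last address of a subnet

print(subnet.num_addresses)                 # 64
print(subnet.num_addresses - AWS_RESERVED)  # 59 assignable on AWS
print(subnet[0], "-", subnet[-1])           # 10.10.0.0 - 10.10.0.63
```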

Aviatrix High Performance Encryption builds high performance encryption tunnels over private network links. The private network links are AWS Direct Connect (DX) and AWS Peering (PCX), Azure ExpressRoute, GCP Cloud Interconnect, and OCI FastConnect.

For High Performance Encryption between two gateways, between a Transit GW and a Spoke Gateway, or between a Transit GW and a Transit GW (Transit Peering), the Aviatrix Controller automatically creates the underlying peering connection and builds the tunnels over it.

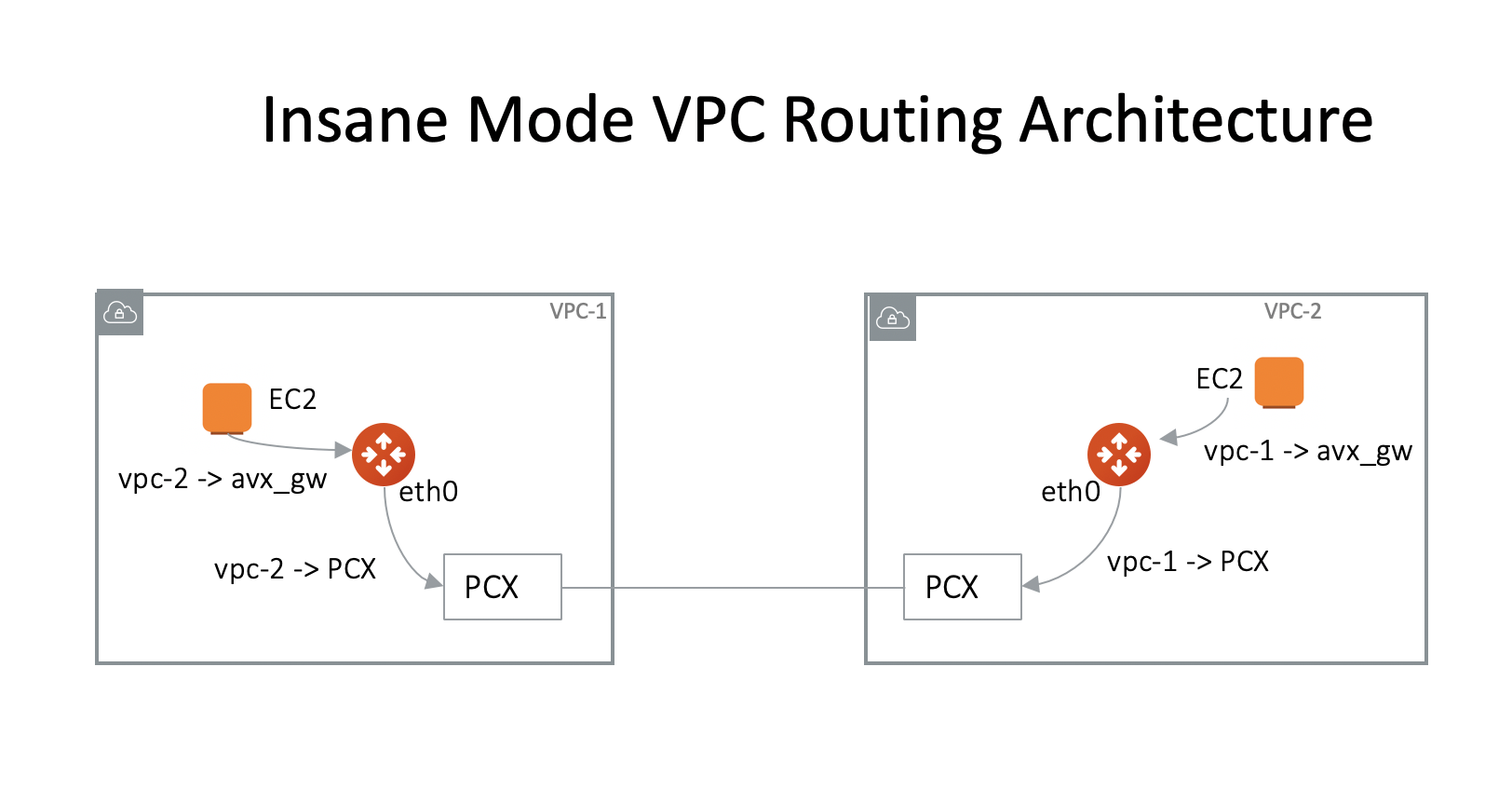

Because High Performance Encryption tunnels run over private network links, VPC/VNet routing is set up as follows: in the route table associated with the virtual machine (EC2/GCE/OCI) instances, the route entry for the remote site points to the Aviatrix Gateway; in the route table associated with the Aviatrix Gateway instance, the route entry for the remote site points to the PCX or VGW.
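This two-step routing can be modeled as two route tables resolved by longest-prefix match. In the sketch below, the CIDRs and the next-hop names `aviatrix-gw` and `pcx-or-vgw` are hypothetical placeholders, not real resource IDs or Aviatrix settings.

```python
import ipaddress
from typing import Optional

# Hypothetical addressing: local VPC/VNet 10.0.0.0/16, remote site 172.16.0.0/16.
REMOTE_SITE = "172.16.0.0/16"

# Route table associated with the virtual machine instances.
vm_route_table = {
    "10.0.0.0/16": "local",
    REMOTE_SITE: "aviatrix-gw",  # remote-site traffic goes to the Aviatrix Gateway
}

# Route table associated with the Aviatrix Gateway instance.
gateway_route_table = {
    "10.0.0.0/16": "local",
    REMOTE_SITE: "pcx-or-vgw",   # remote-site traffic goes to the PCX or VGW
}


def next_hop(route_table: dict[str, str], dst: str) -> Optional[str]:
    """Return the next hop for dst using longest-prefix match, or None."""
    addr = ipaddress.ip_address(dst)
    matches = [ipaddress.ip_network(prefix) for prefix in route_table
               if addr in ipaddress.ip_network(prefix)]
    if not matches:
        return None
    best = max(matches, key=lambda net: net.prefixlen)
    return route_table[str(best)]


print(next_hop(vm_route_table, "172.16.5.10"))       # aviatrix-gw
print(next_hop(gateway_route_table, "172.16.5.10"))  # pcx-or-vgw
print(next_hop(vm_route_table, "10.0.3.4"))          # local
```

A VM's packet to the remote site is first handed to the Aviatrix Gateway; the gateway's own route table then sends remote-site traffic toward the PCX or VGW.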

What are the use cases for High Performance Encryption?

- High performance encrypted transit
- High performance encrypted peering
- High performance encryption over Direct Connect/ExpressRoute/FastConnect/InterConnect
- Overcoming the VGW performance limit and the 100-route limit

How can I deploy Aviatrix High Performance Encryption?

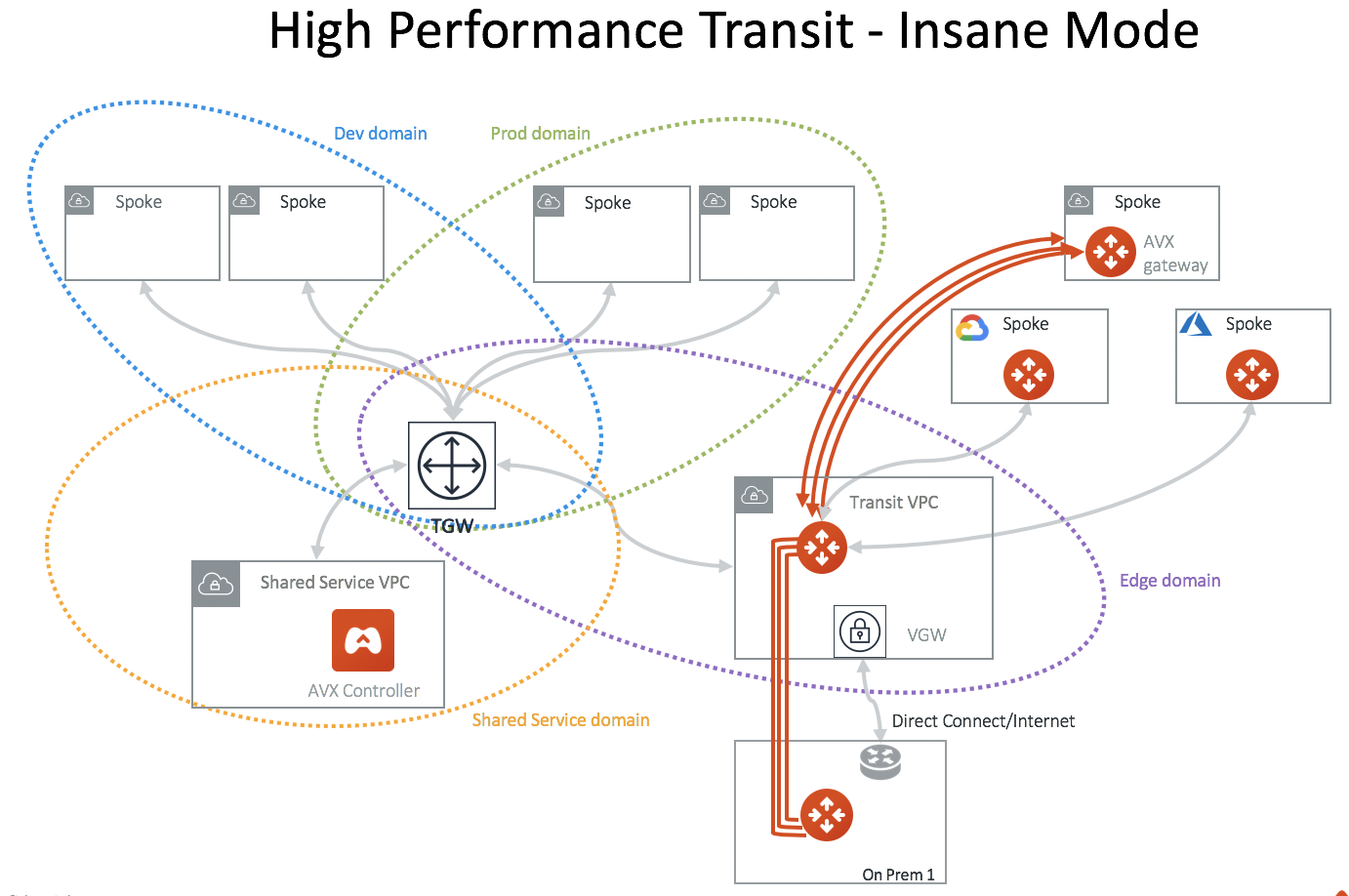

Aviatrix High Performance Encryption Mode is integrated into the Transit Network solution to provide 10Gbps encrypted performance between on-prem and the Transit VPC/VNet. For VPC/VNet to VPC/VNet traffic, High Performance Encryption Mode can achieve 25 to 30Gbps.

High Performance Encryption Mode can also be deployed in a flat (as opposed to Transit VPC/VNet) architecture for 10Gbps encryption.

The diagram above illustrates high performance encryption between the Transit VPC/VNet and on-prem, and between the Transit VPC/VNet and Spoke VPCs/VNets.

High Performance Encryption Performance Benchmarks

Aviatrix High Performance Encryption is available on AWS, Azure, GCP, and OCI. For information about performance test results and how to tune your environment to get the best performance, see ActiveMesh HPE Performance Benchmark.