Using Aviatrix Site2Cloud Tunnels to Access VPC Endpoints in Different Regions

VPC Endpoints in AWS allow you to expose services to customers and partners over AWS PrivateLink. In situations where allowing resources to be accessed directly from the Internet is undesirable, VPC Endpoints can enable internal VPC connectivity to resources in other accounts.

One limitation of VPC Endpoints is that they are a regional construct, meaning you can’t use them to provide connectivity to resources in other regions. In some cases it’s not possible to move those workloads into the same region as the Endpoint.

This is where Aviatrix can help overcome that limitation.

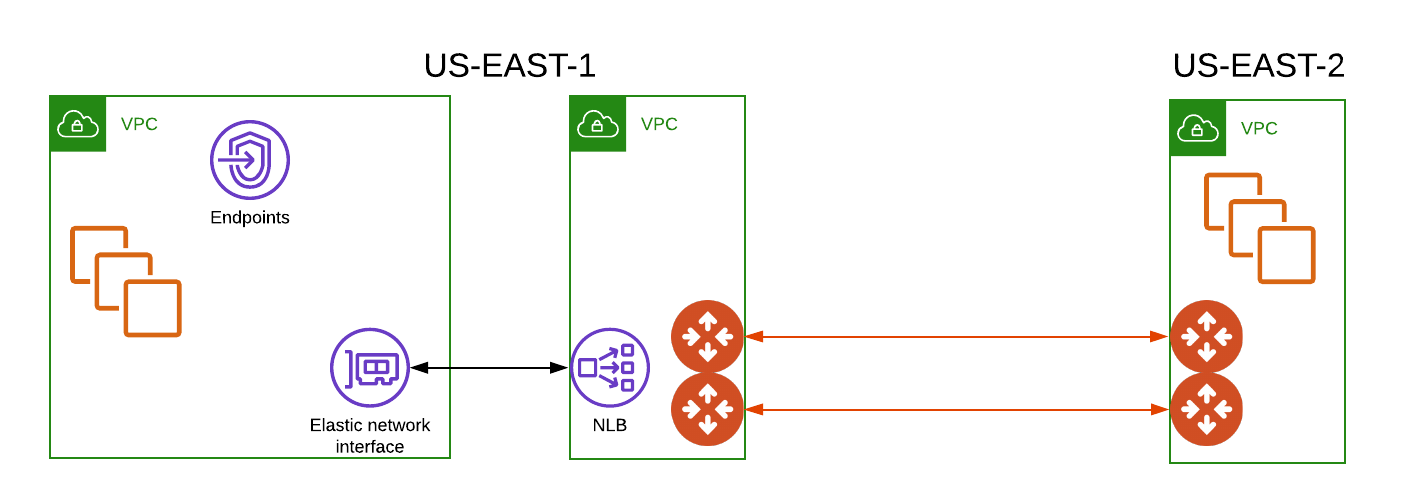

The end design will look similar to the diagram below.

Environment Requirements

In this example there are:

-

Two VPCs in US-East-1. One customer/partner VPC(10.10.10.0/24) with an Endpoint, and our VPC(10.10.11.0/24) with an Endpoint Service tied to an internal Load Balancer.

-

One VPC(10.10.12.0/24) in US-East-2 that hosts our workload.

-

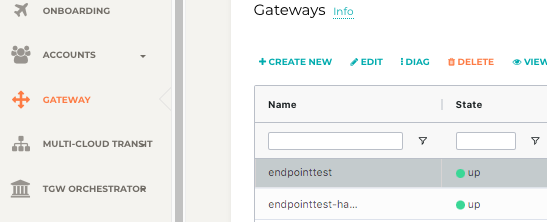

A set of Aviatrix Gateways, 2 in our VPC in US-East-1, and 2 in the workload VPC in US-East-2. Deploying a set of HA Gateways is documented here.

Once the Gateways are deployed, build a set of Site2Cloud tunnels between them. Building a tunnel between Aviatrix Gateways is covered in the Aviatrix documentation.

Build the tunnels in an active-passive configuration to avoid asymmetric routing in AWS.
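Before building anything, it can help to sanity-check the address plan. The sketch below uses only Python's standard `ipaddress` module and the CIDRs from this example; the dictionary keys are made-up labels for illustration, not names used by AWS or Aviatrix.

```python
import ipaddress
from itertools import combinations

# CIDRs from the example environment; the keys are illustrative labels only.
VPCS = {
    "customer-us-east-1": ipaddress.ip_network("10.10.10.0/24"),
    "ours-us-east-1": ipaddress.ip_network("10.10.11.0/24"),
    "workload-us-east-2": ipaddress.ip_network("10.10.12.0/24"),
}


def overlapping_pairs(vpcs):
    """Return the label pairs of all VPCs whose CIDRs overlap."""
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(vpcs.items(), 2)
        if net_a.overlaps(net_b)
    ]


def owning_vpc(address, vpcs):
    """Return the label of the VPC whose CIDR contains the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, network in vpcs.items():
        if ip in network:
            return name
    return None


if __name__ == "__main__":
    print("Overlapping VPC pairs:", overlapping_pairs(VPCS))
    print("10.10.12.69 belongs to:", owning_vpc("10.10.12.69", VPCS))
```

The same helpers can be reused later to confirm that the addresses entered in the DNAT rule fall in the VPCs you expect.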

Step 1: Deploy an internal Load Balancer in AWS

From the EC2 section in the console, choose Load Balancers.

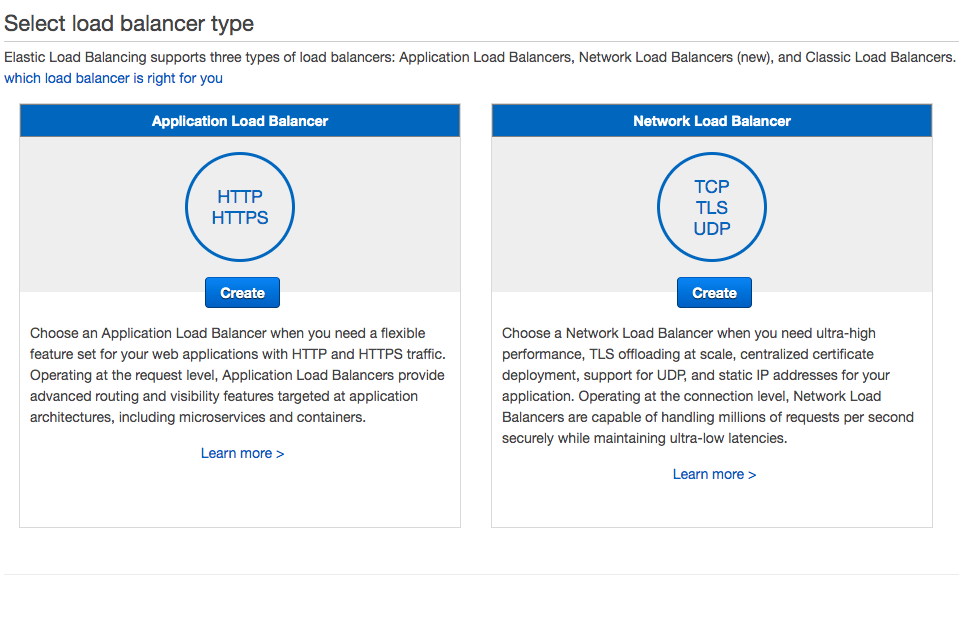

Choose Network Load Balancer.

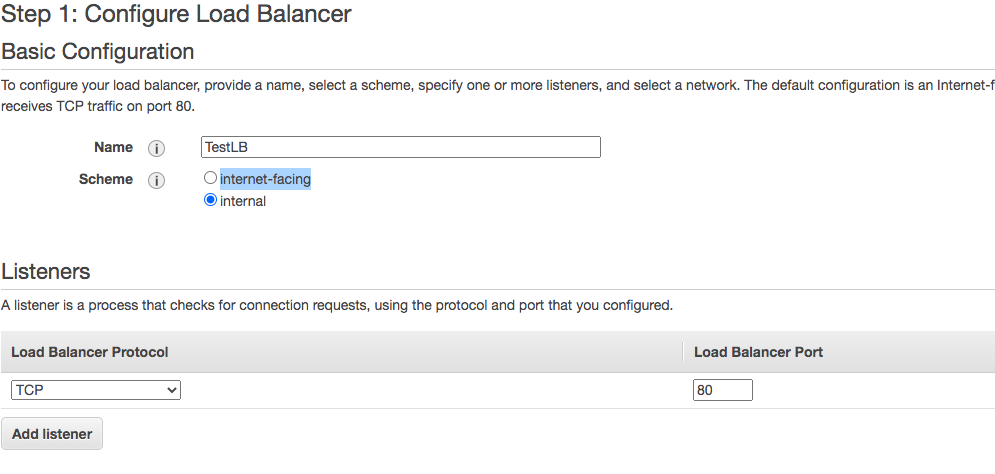

Give the LB a name, choose internal, set a listener port for your workload (80 for this test), and select all Availability Zones in our US-East-1 VPC.

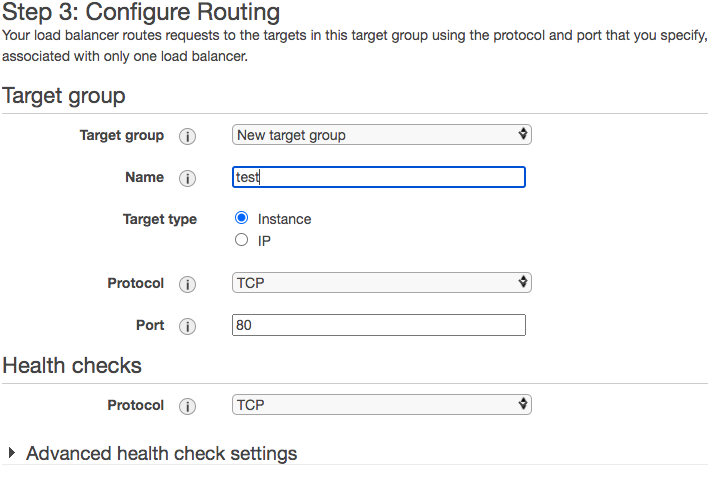

In the Routing section, create a new target group for our workload on port 80. The target type will be 'instance', and health checks will be TCP-based.

In the Targets section, choose the Aviatrix Gateways in our US-East-1 VPC and move them to Registered Targets. Click Next to review, then Create.
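For readers who prefer scripting this step, the console actions above map roughly onto four calls on the AWS SDK's `elbv2` client. The following is a minimal sketch, not a tested deployment script: it assumes `elbv2` behaves like `boto3.client("elbv2")`, and the function name and all arguments (load balancer name, VPC ID, subnet IDs, Gateway instance IDs) are placeholders you would supply yourself.

```python
def build_internal_nlb(elbv2, name, vpc_id, subnet_ids, gateway_instance_ids, port=80):
    """Create an internal NLB that forwards TCP traffic to the Aviatrix Gateways.

    `elbv2` is assumed to behave like boto3.client("elbv2"). All other
    arguments are placeholders supplied by the caller.
    """
    # Internal Network Load Balancer in the chosen subnets (one per AZ).
    lb = elbv2.create_load_balancer(
        Name=name,
        Type="network",
        Scheme="internal",
        Subnets=subnet_ids,
    )["LoadBalancers"][0]

    # Target group: instance targets, TCP health checks, workload port.
    tg = elbv2.create_target_group(
        Name=f"{name}-tg",
        Protocol="TCP",
        Port=port,
        VpcId=vpc_id,
        TargetType="instance",
        HealthCheckProtocol="TCP",
    )["TargetGroups"][0]

    # Register the Aviatrix Gateways in our US-East-1 VPC as targets.
    elbv2.register_targets(
        TargetGroupArn=tg["TargetGroupArn"],
        Targets=[{"Id": instance_id} for instance_id in gateway_instance_ids],
    )

    # Listener that forwards the workload port to the target group.
    elbv2.create_listener(
        LoadBalancerArn=lb["LoadBalancerArn"],
        Protocol="TCP",
        Port=port,
        DefaultActions=[{"Type": "forward", "TargetGroupArn": tg["TargetGroupArn"]}],
    )
    return lb["LoadBalancerArn"], tg["TargetGroupArn"]
```

Passing the client in as an argument keeps the function free of credentials and region settings, which would normally be configured on the client for US-East-1.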

Step 2: Attach an Endpoint Service to our new Load Balancer

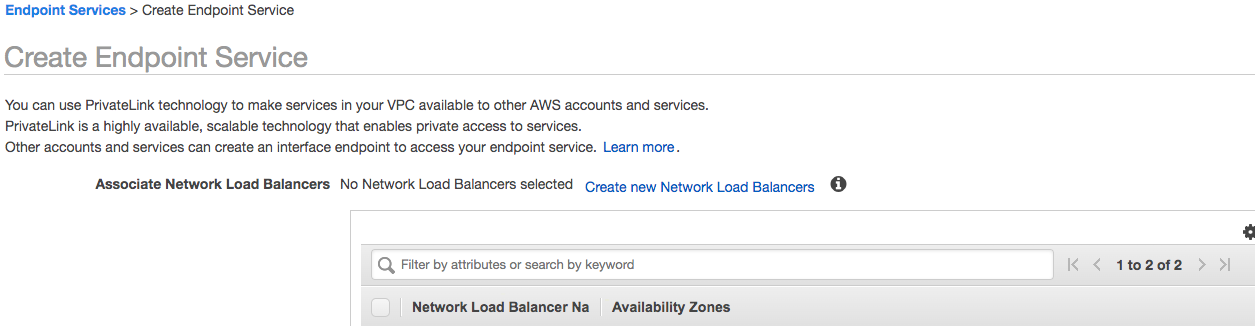

From the VPC section of the AWS console, choose Endpoint Services, then Create Endpoint Service.

The new Load Balancer will be in the list as an available NLB.

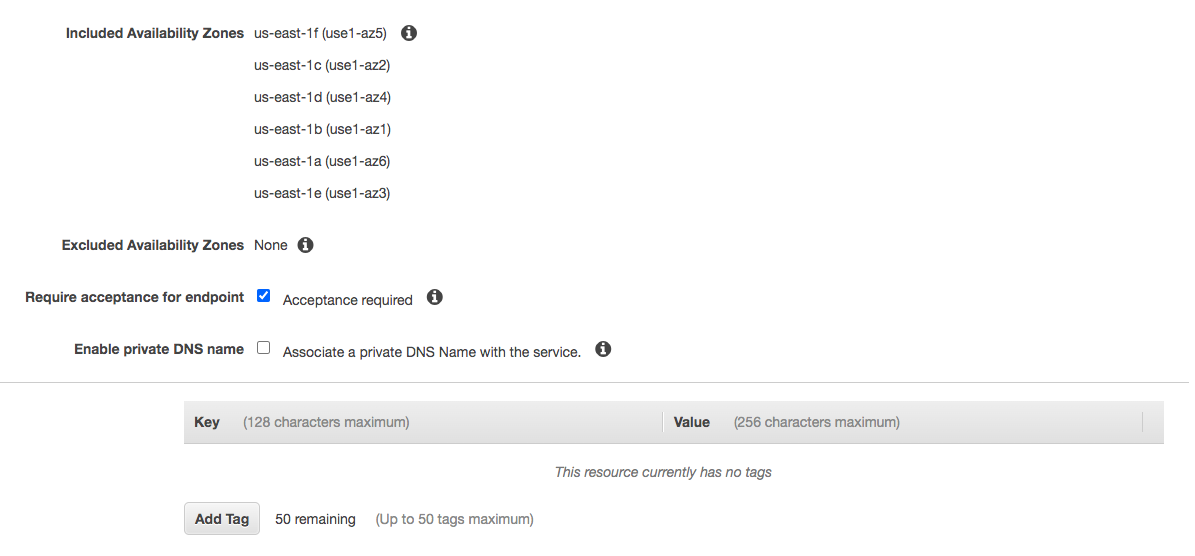

Update these options as needed, then click Create Service.

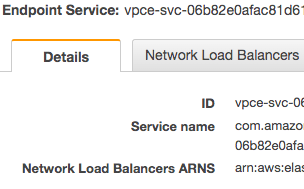

The customer will use this Service ARN to create an Endpoint in their VPC.

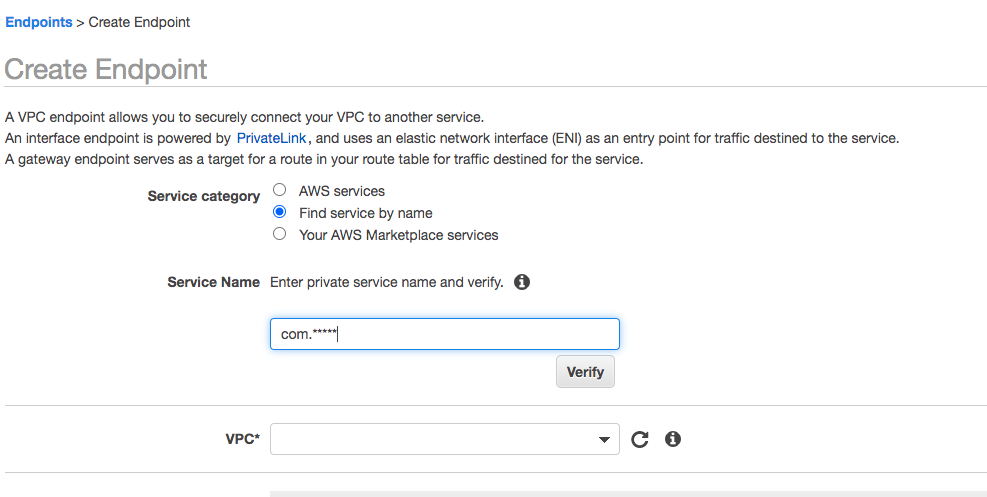

Step 3: Create Endpoint in Customer VPC

In the Customer VPC console, build a new Endpoint.

Enter the ARN from the last step and choose the Customer VPC in which to expose the Endpoint. Once it is built, the Endpoint DNS names can be used to route traffic to the service.
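Steps 2 and 3 can also be sketched with the AWS SDK. The example below assumes each `ec2` argument behaves like `boto3.client("ec2")`: the first function would run with credentials for our account, the second with credentials for the customer account. The function names and arguments are illustrative. Note that the SDK call for creating an Endpoint identifies the service by its service name, which is returned when the Endpoint Service is created.

```python
def publish_endpoint_service(ec2, nlb_arn, acceptance_required=True):
    """Attach an Endpoint Service to the NLB (run in our account).

    `ec2` is assumed to behave like boto3.client("ec2").
    Returns the service ID and the service name the customer will need.
    """
    config = ec2.create_vpc_endpoint_service_configuration(
        NetworkLoadBalancerArns=[nlb_arn],
        AcceptanceRequired=acceptance_required,
    )["ServiceConfiguration"]
    return config["ServiceId"], config["ServiceName"]


def create_customer_endpoint(ec2, service_name, vpc_id, subnet_ids, security_group_ids):
    """Create an interface Endpoint in the Customer VPC (run in the customer account).

    Returns the DNS names reported for the new Endpoint, if any.
    """
    endpoint = ec2.create_vpc_endpoint(
        VpcEndpointType="Interface",
        VpcId=vpc_id,
        ServiceName=service_name,
        SubnetIds=subnet_ids,
        SecurityGroupIds=security_group_ids,
    )["VpcEndpoint"]
    return [entry["DnsName"] for entry in endpoint.get("DnsEntries", [])]
```

If acceptance is required on the Endpoint Service, the connection request from the customer's Endpoint still has to be accepted on our side before traffic flows.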

Step 4: Configure Destination NAT rules on Aviatrix Gateway

A Destination NAT (DNAT) rule sends traffic from our VPC in US-East-1 to the workload VPC in US-East-2.

On the controller, highlight the primary gateway deployed in our US-East-1 VPC. Click the Edit link.

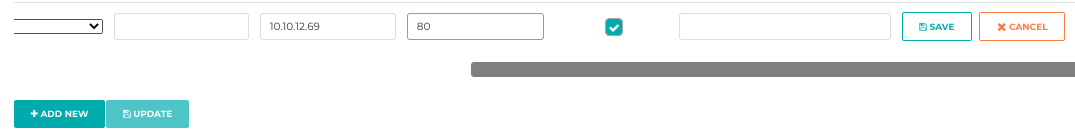

Scroll to the Destination NAT section and choose ADD NEW.

Ensure Sync to HA Gateway is selected.

Fill in the rule as follows:

- Source CIDR: the CIDR of our US-East-1 VPC, 10.10.11.0/24.

- Destination CIDR: the private IP of our primary Gateway, 10.10.11.5/32 in our example.

- Destination Port: 80 in our example.

- Protocol: TCP.

- Connection: None.

- DNAT IPS: the server in the workload VPC, reachable across our Site2Cloud tunnel. In our example the server is 10.10.12.69.

- DNAT PORT: 80.

Once filled out, hit SAVE, then UPDATE.

Repeat this step to create a second rule, changing the Destination CIDR to the private IP of the HA Gateway.
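To make the matching logic of these two rules concrete, here is a small Python model of a DNAT rule. It is only an illustration of how the fields relate to each other, not how the Aviatrix Gateway implements NAT. The values for the primary rule come from this example; the HA Gateway address `10.10.11.21` and the client address `10.10.11.20` are hypothetical.

```python
import ipaddress
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class DnatRule:
    src_cidr: str
    dst_cidr: str
    dst_port: int
    protocol: str
    dnat_ip: str
    dnat_port: int

    def translate(self, src_ip, dst_ip, dst_port, protocol="tcp"):
        """Return the rewritten (ip, port) if the packet matches, else None."""
        matches = (
            protocol == self.protocol
            and dst_port == self.dst_port
            and ipaddress.ip_address(src_ip) in ipaddress.ip_network(self.src_cidr)
            and ipaddress.ip_address(dst_ip) in ipaddress.ip_network(self.dst_cidr)
        )
        return (self.dnat_ip, self.dnat_port) if matches else None


# Rule for the primary Gateway, using the values from this example.
PRIMARY_RULE = DnatRule("10.10.11.0/24", "10.10.11.5/32", 80, "tcp", "10.10.12.69", 80)


def make_ha_rule(rule, ha_gateway_ip):
    """Copy a rule, pointing the Destination CIDR at the HA Gateway's private IP."""
    return replace(rule, dst_cidr=f"{ha_gateway_ip}/32")


if __name__ == "__main__":
    # 10.10.11.21 is a hypothetical HA Gateway address, not from the example.
    ha_rule = make_ha_rule(PRIMARY_RULE, "10.10.11.21")
    print(PRIMARY_RULE.translate("10.10.11.20", "10.10.11.5", 80))
    print(ha_rule.translate("10.10.11.20", "10.10.11.21", 80))
```

A packet from inside 10.10.11.0/24 addressed to a Gateway's private IP on TCP port 80 is rewritten to the workload server; anything else falls through unchanged, which is why each Gateway needs a rule matching its own private IP.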

Step 5: Test connections

Ensure that the health checks on your internal Load Balancer are healthy and that the security groups in your workload VPC (10.10.12.0/24) allow traffic from our VPC in US-East-1 (10.10.11.0/24).

Only one tunnel will be active in our scenario, and Aviatrix will update the route tables to point to the active tunnel.

A simple way to test connectivity is to edit the /etc/hosts file on a Linux instance in the Customer VPC so that the workload's hostname resolves to the IP address behind one of the Endpoint's DNS entries, then connect to that hostname on port 80.
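The check can also be scripted. The sketch below uses only Python's standard library: one helper formats an /etc/hosts line, and the other attempts a TCP connection to the workload port. The function names are illustrative, and the IP address and hostname in the usage note are hypothetical values.

```python
import socket


def hosts_entry(ip_address, hostname):
    """Format one /etc/hosts line that maps hostname to ip_address."""
    return f"{ip_address}\t{hostname}"


def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False
```

For example, `hosts_entry("10.10.10.25", "workload.example.internal")` produces a line you could append to /etc/hosts, after which `tcp_port_open("workload.example.internal", 80)` should return `True` from an instance in the Customer VPC if the whole path (Endpoint, Load Balancer, Gateway DNAT, Site2Cloud tunnel, workload) is working.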